I recently built an Elixir SDK for OpenAI APIs for a project I was working on to automatically fix and correct Japanese grammar (https://fixmyjp.d.sh). The SDK is actually fully auto-generated with metaprogramming which is something I wanted to do in Elixir for a while, but that’s not the topic of today’s post.

Today, let’s use that SDK for something fun and build a smol ChatGPT service for your shell from scratch, in true Elixir fashion!

The end result will be an ai shell command, usable from wherever we want, that sends messages to a distributed gptserver node for interacting with ChatGPT. That node can run on the same computer or anywhere on the same network.

Quick refresher: GenServers, and how state in Elixir is implemented

Elixir is an immutable functional programming language, meaning you can’t mutate things. If you do

a = 1

a = a + 1

you are not mutating a to be a+1, instead you create a new a with the value of a+1. That means you can’t do things like this in Elixir:

a = %{}

a["foo"] = "bar"

instead, you would do:

a = %{}

a = Map.put(a, "foo", "bar")

again, creating a new a with a copy of the previous a, but one item added. The old a is not mutated, it still exists until the garbage collector gets rid of it.
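A quick way to convince yourself of this is to keep a second name pointing at the old map. This is just a minimal sketch; the names original and updated are made up for illustration:

```elixir
original = %{}
updated = Map.put(original, "foo", "bar")

IO.inspect(original) # still the empty map, nothing was mutated
IO.inspect(updated)  # a new map that contains the "foo" entry
```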

So how tf do you actually keep any state across different modules then? From the sounds of it, you would need to constantly do something with a value, you can’t just create an object that different parts of the code can mutate and share.

In Elixir/Erlang-land, instead of having a shared object/container somewhere, we use processes managed within the BEAM VM, and the most common way to define those processes is GenServer.

GenServer is a behaviour that looks like this:

defmodule Foo do

use GenServer

# stuff that's running inside a separate process

@impl true

def init(val), do: {:ok, val}

@impl true

def handle_call({:add, i}, _from, sum) do

{:reply, sum+i, sum+i}

end

# stuff that's running inside the main process

def add(pid, num) do

GenServer.call(pid, {:add, num})

end

end

{:ok, foo} = GenServer.start_link(Foo, 0) # start_link returns {:ok, pid}

Foo.add(foo, 2) # 0 + 2

Foo.add(foo, 3) # 2 + 3

To hold state, we effectively created a new process whose whole purpose is to hold a value in memory, then listen for a message :add, to then add whatever got passed to create a new value (remember? we can’t mutate things) to hold in memory and start listening for new messages again. Imagine it as a (very simplified representation):

function add(startValue) {

add(startValue + waitForUserInput()); // yay, no variables

}
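The same loop can be written by hand in Elixir with spawn and receive, which is roughly the idea GenServer wraps for us. This is a simplified sketch, not how GenServer is actually implemented; the Counter module and its message shapes are invented for this example:

```elixir
defmodule Counter do
  # start a new process whose only job is to hold `initial` in its loop
  def start(initial), do: spawn(fn -> loop(initial) end)

  # runs in the caller: send a message, then wait for the answer
  def add(pid, n) do
    send(pid, {:add, n, self()})

    receive do
      {:sum, sum} -> sum
    after
      1_000 -> {:error, :timeout}
    end
  end

  # runs in the spawned process: wait for a message, reply,
  # then recurse with the new value (no mutation anywhere)
  defp loop(sum) do
    receive do
      {:add, n, from} ->
        new_sum = sum + n
        send(from, {:sum, new_sum})
        loop(new_sum)
    end
  end
end

pid = Counter.start(0)
Counter.add(pid, 2) # 0 + 2
Counter.add(pid, 3) # 2 + 3
```

The state only ever lives in the argument of loop/1, which is exactly the trick from the pseudocode above.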

Level 1: ChatGPT in a GenServer

Okay now that we remembered how to handle state in Elixir, we know what to do to get a ChatGPT-like system going: Wait for user input -> append to messages so far -> send to OpenAI -> output -> wait for user input again

Let’s summon a new mix project:

mix new mixgpt

add my ex_openai library as a dependency in mix.exs:

defp deps do

[

{:ex_openai, ">= 1.0.2"}

]

end

(follow the instructions for configuration with an API key)

and conjure up a basic GenServer with a new {:msg, m} message listener:

defmodule Mixgpt do

use GenServer

@impl true

def init(_opts) do

{:ok, []} # start value is empty list

end

@impl true

def handle_call({:msg, m}, _from, msgs) do

end

end

To make our life easier, we’ll also add a start_link function to start the thing:

def start_link(_opts) do

GenServer.start_link(__MODULE__, [], name: :gptserver)

end

Notice the name: :gptserver argument here - we’re giving the GenServer a unique name so that we don’t need to worry about remembering its PID somewhere.

To send a message to this new genserver, we’ll also create a new send():

def send(msg) do

GenServer.call(:gptserver, {:msg, msg})

end

Thanks to name: :gptserver we already know how to reach this instance, so we no longer need to pass the PID around. Now all we have to do is call Mixgpt.send "hi!".
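To see what name registration buys us, here is a small standalone sketch. NamedEcho and :named_echo are hypothetical names used only for this example, they are not part of the project:

```elixir
defmodule NamedEcho do
  use GenServer

  def start_link(_opts), do: GenServer.start_link(__MODULE__, nil, name: :named_echo)

  @impl true
  def init(state), do: {:ok, state}

  @impl true
  def handle_call({:echo, msg}, _from, state), do: {:reply, msg, state}
end

{:ok, pid} = NamedEcho.start_link(nil)

# the registered name resolves to the same PID we got back from start_link
^pid = Process.whereis(:named_echo)

# so both of these reach the same process
GenServer.call(pid, {:echo, "hi"})
GenServer.call(:named_echo, {:echo, "hi"})

# a second start under the same name fails instead of silently replacing the first
{:error, {:already_started, ^pid}} = NamedEcho.start_link(nil)
```

The last line is also why the name has to be unique: only one process per node can hold it at a time.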

In the actual handle_call, what we have to do is: construct a new OpenAI ChatGPT message with the passed message, send it to the OpenAI API, and add whatever it returned to the internal state of this server (remember from above? how to handle state). Something like this:

defp new_msg(m) do

%ExOpenAI.Components.ChatCompletionRequestMessage{

content: m,

role: :user,

name: "user"

}

end

@impl true

def handle_call({:msg, m}, _from, msgs) do

# create temporary "msgs" with existing list + the new message from the user

# effectively appending the new message to the existing messages

with msgs <- msgs ++ [new_msg(m)] do

# call OpenAI ChatGPT API with the new msgs list

case ExOpenAI.Chat.create_chat_completion(msgs, "gpt-3.5-turbo") do

{:ok, res} ->

# return the content of the result, add it to the msgs list and continue the GenServer loop with {:reply}

first = List.first(res.choices)

# second value is what's returned, third value is the new state of this server

{:reply, first.message.content, msgs ++ [first.message]}

{:error, reason} ->

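# handle_call has to return a valid tuple such as {:reply, ...}, a bare {:error, reason} would crash the GenServer

{:reply, {:error, reason}, msgs}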

end

end

end
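If you want to poke at the state handling without burning API credits, the same pattern can be tried offline. The sketch below is an assumption-heavy stand-in: FakeChat, send_msg, history and the injected complete function are all hypothetical, and plain maps replace the ExOpenAI structs:

```elixir
defmodule FakeChat do
  use GenServer

  # `complete` is a hypothetical 1-arity function standing in for the OpenAI call:
  # it receives the message list and returns {:ok, reply} or {:error, reason}
  def start_link(complete), do: GenServer.start_link(__MODULE__, complete)

  def send_msg(pid, text), do: GenServer.call(pid, {:msg, text})
  def history(pid), do: GenServer.call(pid, :history)

  @impl true
  def init(complete), do: {:ok, {complete, []}}

  @impl true
  def handle_call({:msg, text}, _from, {complete, msgs}) do
    new_msgs = msgs ++ [%{role: :user, content: text}]

    case complete.(new_msgs) do
      {:ok, reply} ->
        # reply to the caller and store both the question and the answer
        {:reply, reply, {complete, new_msgs ++ [%{role: :assistant, content: reply}]}}

      {:error, reason} ->
        # still a :reply tuple; in this sketch the failed message is dropped
        {:reply, {:error, reason}, {complete, msgs}}
    end
  end

  def handle_call(:history, _from, {_complete, msgs} = state), do: {:reply, msgs, state}
end

# stub "model" that reports how many messages it was given
stub = fn msgs -> {:ok, "seen #{length(msgs)} message(s)"} end
{:ok, pid} = FakeChat.start_link(stub)

FakeChat.send_msg(pid, "hi")    # the stub sees 1 message
FakeChat.send_msg(pid, "again") # the stub sees 3 (user, assistant, user)
```

Whether a failed message should stay in the history or be dropped is a design choice; this sketch drops it so a retry does not send it twice.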

The cool thing about Elixir and other functional languages is that they usually come with a REPL, or a shell that you can use to interact with different bits and pieces of your program. In Elixir, that’s iex. Run iex -S mix, wait until it starts, then try to interact with our new GenServer:

iex> Mixgpt.start_link(nil)

{:ok, #PID<0.280.0>}

iex> Mixgpt.send "Hi!"

"Hello! How can I assist you today?"

iex> Mixgpt.send "tell me a joke in 5 words"

"\"Why did the tomato blush?\""

Cool, we already have our own pocket ChatGPT that we can access through iex. That was pretty quick, wasn’t it? Let’s make it a bit more automatic and close the loop. To wait for user input we can use IO.gets, so let’s use that in a new function that directly asks for the next message:

def wait_for_input_and_send() do

IO.gets("> ")

|> String.trim() # IO.gets keeps the trailing newline

|> send()

|> IO.puts() # print the message out to have it nicely formatted

wait_for_input_and_send() # recurse

end
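One thing worth knowing about IO.gets: besides a string (including the trailing newline) it can also return :eof or {:error, reason}, for example when stdin is closed. A slightly more defensive loop could look like the sketch below. InputLoop, the /quit command and the injected send_fun are assumptions made for this example, not part of the project:

```elixir
defmodule InputLoop do
  # turn the raw IO.gets/1 result into something the loop can act on
  def parse(:eof), do: :quit
  def parse({:error, _reason}), do: :quit

  def parse(line) when is_binary(line) do
    case String.trim(line) do
      "" -> :skip
      "/quit" -> :quit # hypothetical exit command
      text -> {:send, text}
    end
  end

  # `send_fun` is any 1-arity function that returns the reply text
  def run(send_fun) do
    case parse(IO.gets("> ")) do
      :quit ->
        :ok

      :skip ->
        run(send_fun)

      {:send, text} ->
        text |> send_fun.() |> IO.puts()
        run(send_fun)
    end
  end
end
```

Inside the project this would be started with something like InputLoop.run(&Mixgpt.send/1).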

Type recompile into the iex shell to recompile the project and try again:

iex> Mixgpt.wait_for_input_and_send

> Hi

Hello, how can I assist you today?

> Do you like Elixir?

As an AI language model, I don't have personal preferences or feelings. However, I can tell you that Elixir is a popular programming language among developers and has some unique features that make it stand out, such as its scalability, fault tolerance, and ease of use for distributed systems.

> what was the first message I sent you?

The first message you sent me was "hi".

>

Level 2: Distributed ChatGPT service

The example above is easy to do in any programming language, but let’s go one step further and do something cool that you can do beautifully in Elixir and Erlang, but not as easily in other programming languages: Let’s turn this thing into a proper distributed system!

As a reminder, our mini ChatGPT is running inside a server! We did this because that’s how state is handled in Elixir. Even though we created the interface so that we can just call send("why is the sky green?"), under the hood it still sends a message to this gptserver process, then waits until it responds. It just does this so transparently that we don’t even notice.

But what’s even cooler: GenServers can already be accessed from other nodes within the same BEAM cluster, so we already have something that is usable over a network! Let’s take a look at how to do that, with only a little bit of black magic.

Close the iex shell we had open, and create a new one, but this time with the --name parameter:

iex --name gptserver@127.0.0.1 -S mix

iex> Mixgpt.start_link(nil)

{:ok, #PID<0.276.0>}

--name tells Elixir to start a distributed node with the given name, written as name@host. So we created a distributed node called gptserver on the host 127.0.0.1.

In a new terminal window, start another iex session, this time without -S mix, but still with --name (any node name that is not already taken works; client is used here as a placeholder):

iex --name client@127.0.0.1

To now execute things on another cluster node, Elixir provides the Node.spawn_link function among other things. With this you could do stuff like:

iex> Node.spawn_link(:"gptserver@127.0.0.1", fn -> IO.puts("hi there, I'm running on another node") end)

But we already have a GenServer running, so there is no need for that; we can just hit the GenServer directly. This is also very easy to do: change the GenServer.call(:gptserver, {:msg, msg}) call to also include the node where :gptserver is running, {:gptserver, :"gptserver@127.0.0.1"}:

iex> GenServer.call({:gptserver, :"gptserver@127.0.0.1"}, {:msg, "Helloo, anyone there?"})

"Hello! Yes, I'm here. How can I assist you?"

Do you realize what just happened? Our completely separate iex shells just connected to each other to send a message to the GenServer running under the :gptserver name, which then hits the OpenAI API, returns the data, and relays it back to the other iex shell!

We just created a distributed ChatGPT service on our local network (well, localhost), all without any extra setup required! This even works across machines, as long as the nodes can reach each other over the network and share the same Erlang cookie :)
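Two things bite quickly with remote calls: GenServer.call has a default timeout of 5 seconds, which a slow completion can exceed, and it exits instead of returning an error when the node or process can’t be reached. A small wrapper can smooth that over. This is a sketch under assumptions: RemoteAsk and the 30 second default are invented here, only the {:gptserver, node} addressing and the {:msg, msg} message come from the code above:

```elixir
defmodule RemoteAsk do
  # `node` is the full node name the server was started with (an atom)
  def ask(node, msg, timeout \\ 30_000) do
    # GenServer.call/3 exits (rather than returning an error) on timeout
    # or when the target node or process cannot be reached
    {:ok, GenServer.call({:gptserver, node}, {:msg, msg}, timeout)}
  catch
    :exit, reason -> {:error, reason}
  end
end
```

With the server from above running, RemoteAsk.ask(:"gptserver@127.0.0.1", "hi") would give back an {:ok, reply} tuple, and an {:error, reason} tuple if the node is down.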

Final step: A global shell command

You can already guess what comes next. We can run Elixir code directly with elixir -e "IO.puts \"Hi\"". The last lego piece missing is hooking this up to a global shell command, so that we can access our mini-ChatGPT whenever we need through a terminal, while retaining previous context.

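To package it all up into a one-liner (the name after --name is arbitrary, as long as no other running node uses it; ai is a placeholder here):

$ elixir --name ai@127.0.0.1 -e "GenServer.call({:gptserver, :'gptserver@127.0.0.1'}, {:msg, \"hi\"}) |> IO.puts"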

Hello! How can I assist you today?

That’s still the same call as before, except we’re running it through elixir -e "", and pipe the result into IO.puts to write it to the shell. This is still using the same GenServer that’s still running (unless you shut it down), and it still retains the same message history from earlier.

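Lastly, package it into a bash function that forwards its arguments to the Elixir code through System.argv (ai@127.0.0.1 is a placeholder client node name):

ai() { elixir --name ai@127.0.0.1 -e "GenServer.call({:gptserver, :'gptserver@127.0.0.1'}, {:msg, Enum.join(System.argv(), \" \")}) |> IO.puts" -- "$@"; }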

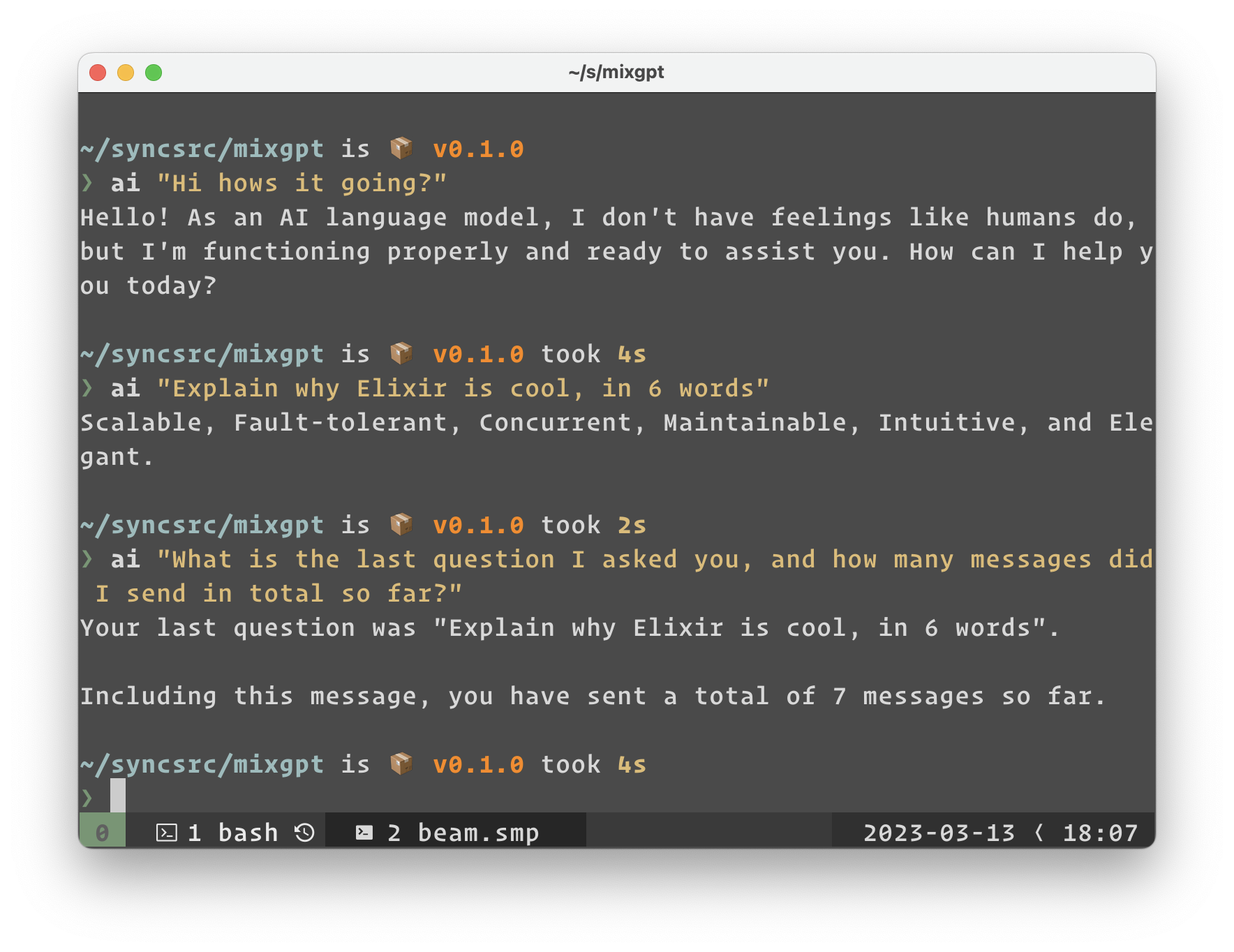

Tadaa! We now have a system-wide (or network-wide) mini-ChatGPT that is fully stateful:

$ ai is the sky blue?

Yes, on clear days, the daytime sky typically appears blue due to the scattering of sunlight by Earth's atmosphere.

$ ai what is the last message I sent you?

Your last message was "is the sky blue?"

We could easily put this on a home server and have it available across all of our machines without any extra setup if we really wanted to.

Of course, this is still super barebones and in reality, we’d do a few more things:

- A Supervisor to handle crashes

- Changing :gptserver to be global, so we don’t need to know the hostname

- Some way to reset or clear the message history, so messages don’t pile up endlessly

- Better error handling

among others
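To give a rough idea of how the first three items could fit together, here is a minimal sketch. HistoryServer, :history_server and the add/reset functions are hypothetical names and the OpenAI call is left out entirely, so treat it as a starting point rather than the finished thing:

```elixir
defmodule HistoryServer do
  use GenServer

  # {:global, name} registers the process cluster-wide instead of per node,
  # so callers don't need to know which node it lives on
  @name {:global, :history_server}

  def start_link(_opts), do: GenServer.start_link(__MODULE__, [], name: @name)

  def add(msg), do: GenServer.call(@name, {:add, msg})
  def reset, do: GenServer.call(@name, :reset)

  @impl true
  def init(msgs), do: {:ok, msgs}

  @impl true
  def handle_call({:add, msg}, _from, msgs) do
    msgs = msgs ++ [msg]
    {:reply, length(msgs), msgs}
  end

  # throw away the history and start over with an empty list
  def handle_call(:reset, _from, _msgs), do: {:reply, :ok, []}
end

# a one_for_one supervisor restarts the server (with an empty history) if it crashes
{:ok, _sup} = Supervisor.start_link([HistoryServer], strategy: :one_for_one)

HistoryServer.add("hi")    # history now has 1 entry
HistoryServer.add("again") # 2 entries
HistoryServer.reset()      # back to an empty history
```

Note that a restart loses the conversation, so if the history matters it would have to be persisted somewhere outside the process.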